Australia’s budget austerity means the Australian Research Council (ARC) might freeze all competitive grant funding rounds and awards. Australian academics are upset.

For two decades the ARC has been one of Australia’s most important funding agencies for competitive research grants. ARC funding success provides career prestige and national visibility for an academic researcher or collaborative research team. The ARC’s Discovery and Linkage rounds have a success rate of 17-19% whilst the rate for DECRA awards for Early Career Researchers (ECRs), those in their first five years after PhD completion, has been 12%. An ARC grant is a criterion that many promotions committees use for Associate Professor and Professor positions. This competitiveness means that a successful ARC grant application can take a year to write. The publications track record needed for successful applicants can take five to seven years to develop.

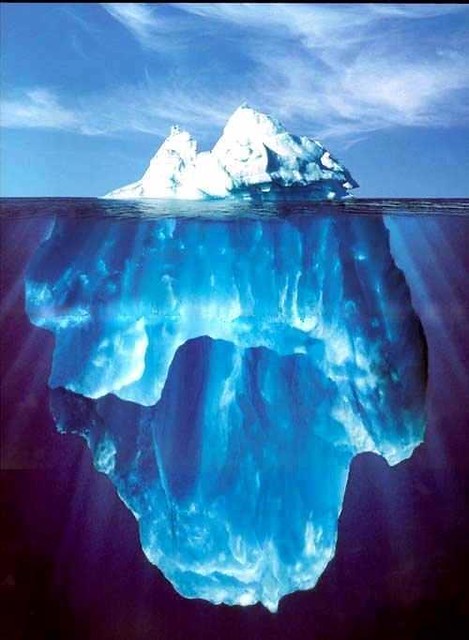

The ARC’s freeze decision is symptomatic of a deeper sea change in Australian research management: the rise of ‘high finance’ decision-making more akin to private equity and asset management firms.

Richard Hil’s recent book Whackademia evoked the traditional, scholarly Ivory Tower that I remember falling apart during my undergraduate years at Melbourne’s La Trobe University. Hil’s experience fits a Keynesian economic model of universities. Academics got tenure for life and rarely switched universities. There was no ‘publish or perish’ pressure. There was a more collegial atmosphere with smaller class sizes. Performance metrics, national journal rankings, and research incentive schemes did not exist. The “life of the mind” was enough. Universities invested in academics for the long term because they had a 20-30 year time horizon for research careers to mature. There was no intellectual property strategy to protect academics’ research or to create different revenue streams.

‘High finance’ decision-making creates a different university environment to Hil’s Keynesian Ivory Tower. Senior managers believe they face a hyper-competitive, volatile environment of disruptive, low-cost challengers. This strategic thinking convinced University of Virginia’s board chair Helen Dragas to lead a failed, internal coup d’etat against president Teresa Sullivan with the support of hedge fund maven Paul Tudor Jones II. The same thinking shapes the cost reduction initiatives underway at many Australian universities. It creates a lucrative market in higher education for management consulting firms. It influences journalists, who often take public statements at face value instead of doing more skeptical, investigative work.

The ARC has played a pivotal role in the sectoral change unfolding in higher education. Its journal rankings scheme, the Excellence in Research for Australia (ERA), provided the impetus for initial organisational reforms and for the dominance of superstar economics in academic research careers. ERA empowered research administrators to learn from GE’s Jack Welch and to do forced rankings of academics based on their past research performance. ARC competitive grants and other Category 1 funding became vital for research budgets. Hil’s professoriate are now expected to mentor younger, ECR academics and to be ‘rain-makers’ who bring in grant funding and other research income sources. Academics’ reaction to the ARC’s freeze decision highlights that the Keynesian Ivory Tower has shaky foundations.

The make-or-buy decision in ‘high finance’ changes everything. Hil’s Ivory Tower was like a Classical Hollywood film studio or a traditional record company: invest upfront in talent for a long-term payoff. Combining ERA’s forced rankings of academic staff with capital budgeting and valuation techniques creates a world that is closer to private equity or venture capital ‘screening’ of firms. Why have a 20-to-30-year time frame for an academic research career when you can buy in the expertise from other research teams? Or handle current staff using short-term contracts and ‘up or out’ attrition? And what if your strategy changes in several years to deal with a volatile market environment? Entire academic careers can now be modeled using Microsoft Excel and Business Analyst workflow models as a stream of timed cash-flows from publications, competitive grants, and other sources. Resource allocation decisions can then be considered. ARC competitive grants and research quality are still important, but ‘high finance’ decision-making has changed research management in universities forever.
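To make the ‘stream of timed cash-flows’ idea concrete, here is a minimal sketch of a net present value (NPV) calculation, the standard capital budgeting technique for valuing cash-flows over time. Everything in it is an assumption for illustration: the function name, the discount rate, and all dollar figures are hypothetical and do not come from any university’s actual model.

```python
def net_present_value(cash_flows, discount_rate):
    """Discount (year, amount) cash-flows back to year 0 and sum them."""
    return sum(
        amount / (1 + discount_rate) ** year
        for year, amount in cash_flows
    )


# Hypothetical academic career viewed as timed cash-flows.
# Negative amounts are costs to the university; positive amounts are income.
hypothetical_career = [
    (0, -120_000),  # year 0: salary and on-costs, no research income yet
    (1, -120_000),  # year 1: still building a publications track record
    (2, -90_000),   # year 2: publication-linked income offsets some cost
    (3, 50_000),    # year 3: a competitive grant is awarded
    (4, 150_000),   # year 4: grant and other research income
]

# Assumed 7% discount rate, chosen only for illustration.
career_value = net_present_value(hypothetical_career, discount_rate=0.07)
print(f"Hypothetical career NPV: {career_value:,.0f}")
```

A research manager using this kind of model would compare the NPV of ‘making’ a career in-house against ‘buying’ in an established team, which is exactly why a long payoff horizon looks unattractive once future income is discounted.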

Today’s young academics face a future that may be more like auteur film directors or indie musicians: lean, independent, and self-financing.

Photo: missdarlene/Flickr.